If you’re paying $200 a month for ChatGPT Pro or Claude Max, it’s becoming harder to ignore the alternatives.

China’s Zhipu AI released GLM-4.7 on December 22. It is an open-source model aimed at coding, and it matches several premium competitors on key benchmarks. It costs $3 a month, or nothing beyond your own hardware if you run it locally.

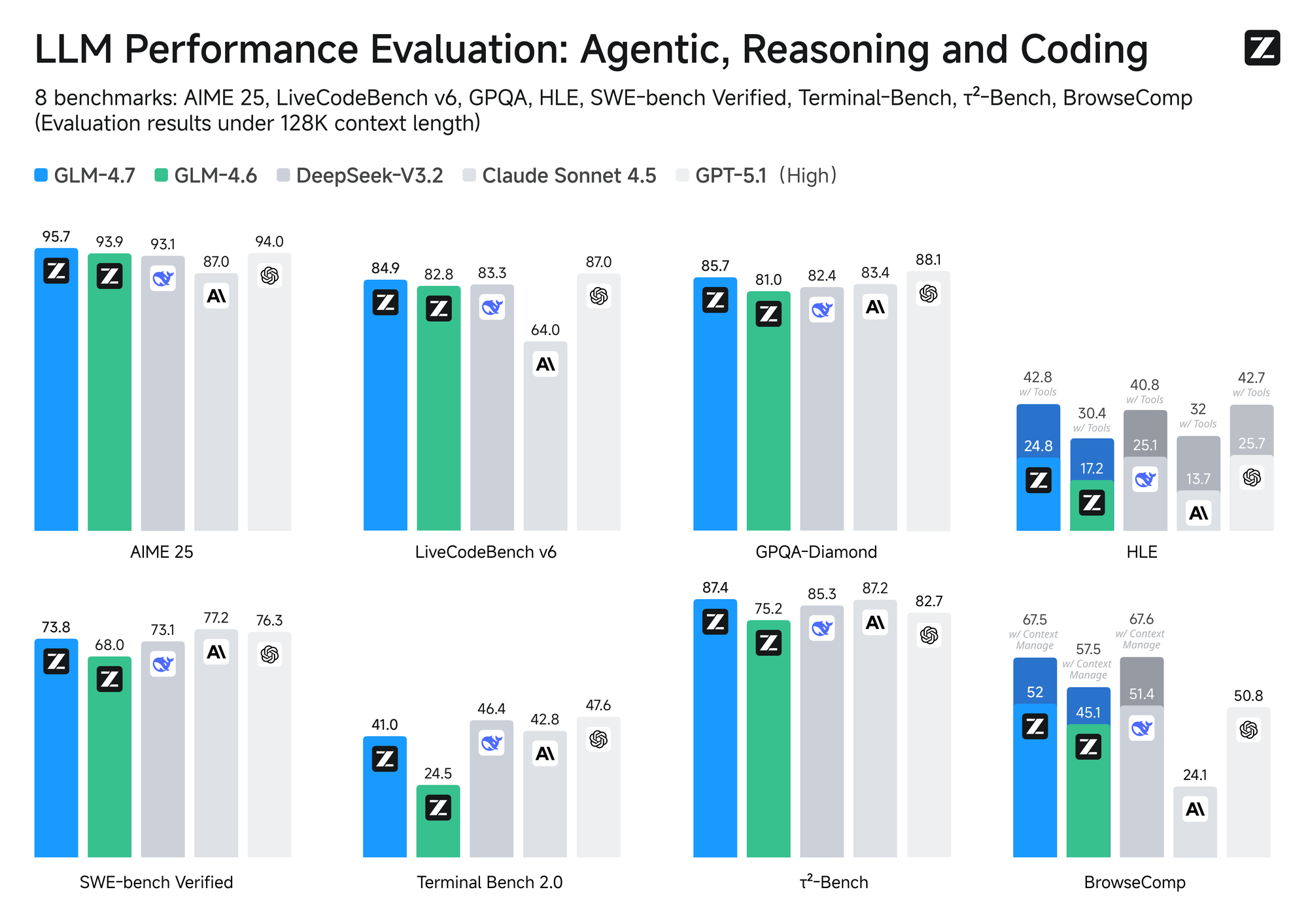

The claim sounds bold, but the numbers largely back it up.

- **LiveCodeBench:** this benchmark tests code generation on recently published competitive-programming problems. GLM-4.7 scored 84.9%, ahead of Claude Sonnet 4.5.
- **SWE-bench Verified:** this benchmark measures how well models fix real GitHub issues. GLM-4.7 reached 73.8%, the highest among open-source models.
- **AIME 2025:** on this mathematics benchmark, it hit 95.7%.

These are not marginal results. They raise serious questions about the value of $200-a-month subscriptions for developers who mainly need help with coding.

Memory That Actually Works

Here’s where things get interesting. Many AI coding assistants lose track of context in long sessions. You’re deep into debugging, and suddenly the model asks you to re-explain your project.

GLM-4.7 introduces what Zhipu calls “Preserved Thinking.” The model keeps its reasoning chain across multiple turns instead of resetting it each time. For developers using agentic tools such as Claude Code or Cline, this targets constant repetition, one of the biggest frustrations in AI-assisted coding.

On Humanity’s Last Exam, a broad expert-level reasoning benchmark, GLM-4.7 scored 42.8% with tool use. That is a 41% relative improvement over its predecessor, and it puts GLM-4.7 ahead of GPT-5.1 on that specific test. On τ²-Bench, which tests multi-step tool use in simulated customer-service conversations, it reached 87.4%.

Benchmarks don’t tell the whole story, but these results suggest real practical progress rather than marketing alone.

Hardware Requirements and Trust Questions

There’s no free lunch.

Running GLM-4.7 locally requires serious hardware: datacenter GPUs or multi-GPU consumer setups. For most developers, the realistic options are the $3-a-month cloud plan at chat.z.ai or pay-as-you-go API access.

Then there is the trust issue. Chinese AI labs have made aggressive benchmark claims in the past. DeepSeek’s R1 in January and Baidu’s ERNIE models both promised disruption. Some of these claims delivered, while others didn’t hold up under real-world use.

Early feedback on GLM-4.7 is cautiously positive. Developers report:

- Cleaner HTML output

- Better UI proportions

- Fewer context-loss errors compared to GLM-4.6

But the model has been live for less than 48 hours. The real test is not leaderboard performance but how it behaves inside production workflows over time.

Why This Launch Matters Beyond the Model

The competitive dynamic is shifting.

OpenAI, Anthropic, and Google spent 2025 normalising premium tiers priced at $200 a month or more, framing them as the cost of doing serious work. GLM-4.7 challenges that assumption by closing the gap on core coding tasks without the subscription wall.

For startups watching burn rates and solo developers priced out of top tiers, that is a meaningful change.

Zhipu’s timing looks deliberate. It launched GLM-4.7 alongside its Open Platform, which offers global API access with Python and Java support. The launch lands just as companies plan their 2026 tooling budgets. If reliability holds, this could force a repricing across the industry.

Is Premium AI Still Worth It?

It comes down to what your team actually needs.

If your team needs enterprise-grade uptime, legal guarantees, and full support contracts, premium subscriptions still make sense. But if you are a solo developer or a small team willing to trade some convenience for cost control, GLM-4.7 has become a real alternative.

The weights are already available on Hugging Face and ModelScope. Access via chat.z.ai starts at $3 a month.

The next three months will be telling. If GLM-4.7 survives real developer use without major issues, the AI coding market is about to become far more competitive, and far cheaper.