They say you shouldn’t build a house with someone else’s bricks, but that’s exactly what OpenAI is being accused of.

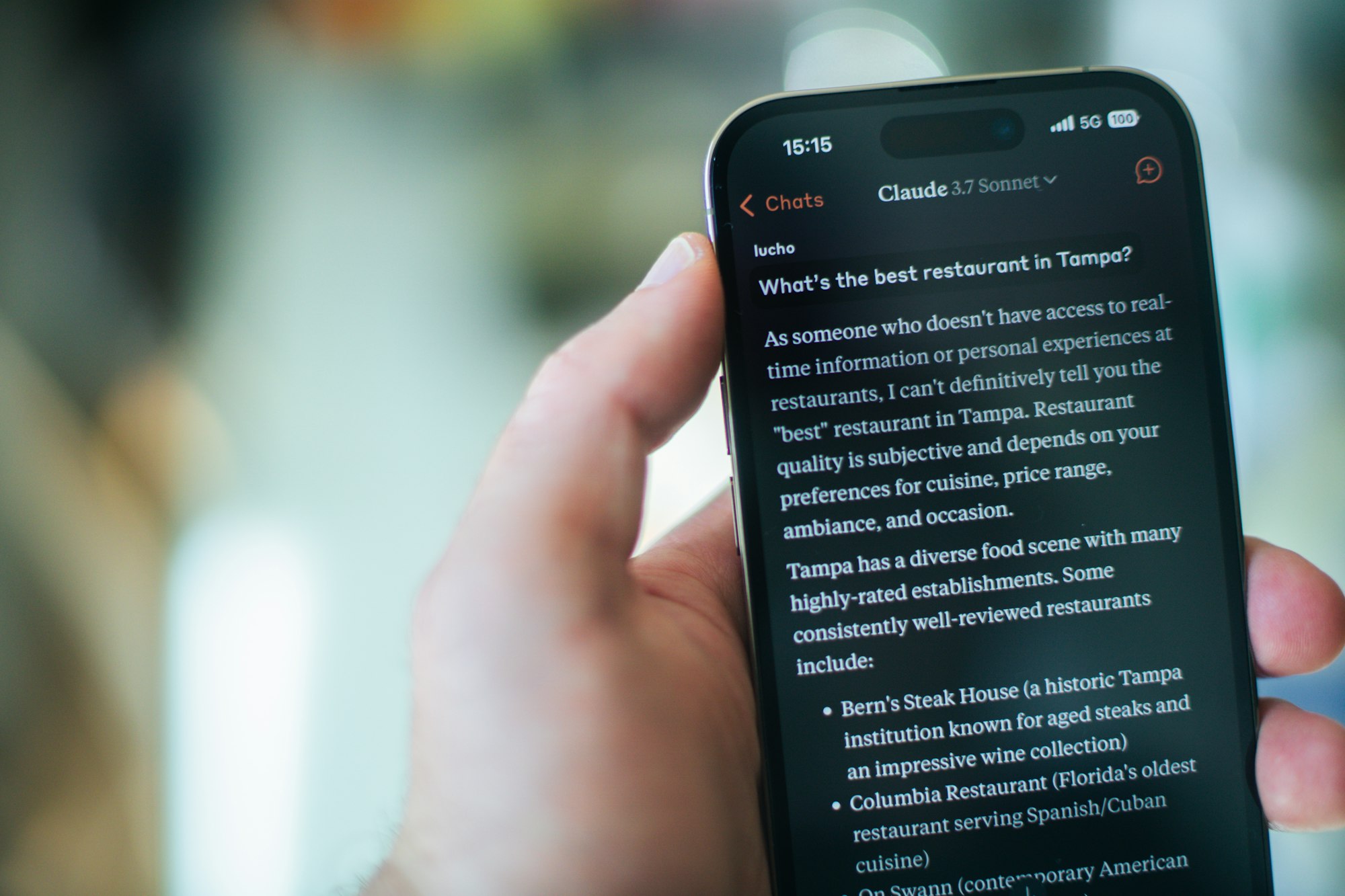

Anthropic has reportedly cut off OpenAI’s access to its Claude API after discovering it was being used in ways that went beyond casual testing. According to Wired, OpenAI was plugging Claude into its own internal tools through developer API access, rather than the regular chat interface, to help train and evaluate GPT-5, its next big model, set to launch this August.

Behind the scenes, OpenAI engineers were said to be testing Claude’s performance on tasks like coding, creative writing, and safety prompts, then using that data to fine-tune GPT-5. The idea was to see how their model stacked up and make sure it could do better.

That didn’t sit well with Anthropic, whose commercial terms explicitly forbid using Claude to build or train competing AI models. So access was shut off. OpenAI pushed back, saying it was following an industry norm: most AI companies, it argued, benchmark their models against each other’s. Still, it said it was disappointed, especially since Anthropic still has access to OpenAI’s own API.

Tensions like this have been building for a while. In June, Anthropic also revoked API access for Windsurf, an AI coding startup rumoured to be in acquisition talks with OpenAI, apparently to avoid indirectly supplying a competitor. Anthropic has since tightened rate limits to curb resale and unauthorised account sharing.

Interestingly, OpenAI has made similar accusations of its own. Earlier this year, it called out Chinese AI startup DeepSeek for using OpenAI’s systems and data to train its own models.

Ultimately, this kind of back-and-forth shows how fragile access can be in the AI space. One day you’re using a rival’s tool to test your model; the next, you’re locked out.

For anyone working with these platforms, it’s a reminder to tread carefully, because the rules are territorial as well as technical.