AI is reshaping industries across the digital economy—including music, a domain long defined by human expression. Among musical genres, rap poses a unique challenge for AI: it combines dense lyricism, rhythmic precision, and stylistic delivery, all tightly aligned with beat structures.

Beyond creative experimentation, AI-powered rap systems show how machine learning can generate structured artistic output by modelling language, timing, and voice in sync.

Table of Contents

- Core Technologies Enabling AI Rap

- How AI Systems Generate Rap: From Input to Output

- Applications Across Creative Fields

- Ethical, Cultural, and Legal Considerations

Part 1: Core Technologies Enabling AI Rap

Language Models Beyond Meaning

Rap lyrics differ from typical text. They’re full of wordplay, internal rhymes, and broken syntax, often crafted to fit the beat.

To replicate this, AI systems rely on advanced text generation models trained specifically on rap lyrics. These models don’t just look at what words mean; they learn how words sound together, how syllables fall, and where emphasis lands. That’s how they create lines that both rhyme and flow.
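
To make "how syllables fall" concrete, here is a minimal sketch of a syllable counter. It is a spelling-based heuristic written for illustration, not how a trained lyric model represents sound, and the names `count_syllables` and `line_syllables` are hypothetical. It will miscount irregular words, but it is enough to compare the rough length of two lines.

```python
import re

# A run of vowel letters is treated as one syllable nucleus (rough assumption).
VOWEL_GROUP = re.compile(r"[aeiouy]+")

def count_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups in the spelling."""
    letters = re.sub(r"[^a-z]", "", word.lower())
    if not letters:
        return 0
    count = len(VOWEL_GROUP.findall(letters))
    # A trailing 'e' is usually silent ("flame"), except in "-le" and "-ee".
    if letters.endswith("e") and not letters.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)

def line_syllables(line: str) -> int:
    """Total estimated syllables in one lyric line."""
    return sum(count_syllables(word) for word in line.split())

verse = [
    "Drop the beat and watch me go",
    "Every line is built to flow",
]
counts = [line_syllables(line) for line in verse]
print(counts)
```

Lines with similar counts tend to sit more evenly over the same bar. A production system would more likely use a pronunciation dictionary or learned phoneme model rather than spelling rules.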

Synthetic Voices with Flow

Writing lyrics is only part of the job—AI must also perform them. Rap requires style: tone, pacing, and delivery. To do this, AI music models simulate human speech, tuned to mimic different rap styles. Trained on thousands of samples, they capture rhythm, emotion, and vocal attitude.

Timing That Syncs with the Beat

Even great lyrics fail if they miss the beat. AI systems must grasp musical structure—tempo, bars, and drum patterns—so lyrics align rhythmically.

Each syllable is mapped to precise musical moments, ensuring the verse lands with the beat. This timing is what makes AI rap feel authentic.
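
The mapping from syllables to musical moments can be sketched with simple tempo arithmetic. The example below assumes 4/4 time and a sixteenth-note grid (four slots per beat), and spreads a line's syllables evenly across one bar. The function name `syllable_onsets` and its parameters are illustrative assumptions, not any platform's actual API.

```python
def syllable_onsets(n_syllables, bpm, bar_index=0, beats_per_bar=4, slots_per_beat=4):
    """Return onset times in seconds for syllables spread evenly over one bar.

    Each syllable is snapped to a grid slot (a sixteenth note by default).
    """
    slots = beats_per_bar * slots_per_beat
    if not 0 < n_syllables <= slots:
        raise ValueError("syllable count must be between 1 and the number of grid slots")
    seconds_per_beat = 60.0 / bpm
    slot_len = seconds_per_beat / slots_per_beat
    bar_start = bar_index * beats_per_bar * seconds_per_beat
    # Integer division keeps every syllable on a grid slot.
    return [
        bar_start + ((i * slots) // n_syllables) * slot_len
        for i in range(n_syllables)
    ]

# Four syllables at 120 BPM land on the four beats of the first bar.
print(syllable_onsets(4, bpm=120))  # [0.0, 0.5, 1.0, 1.5]
```

Real delivery is rarely this even: rappers push and pull against the grid, so a learned system would add per-syllable offsets on top of a baseline like this one.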

Part 2: How AI Systems Generate Rap: From Input to Output

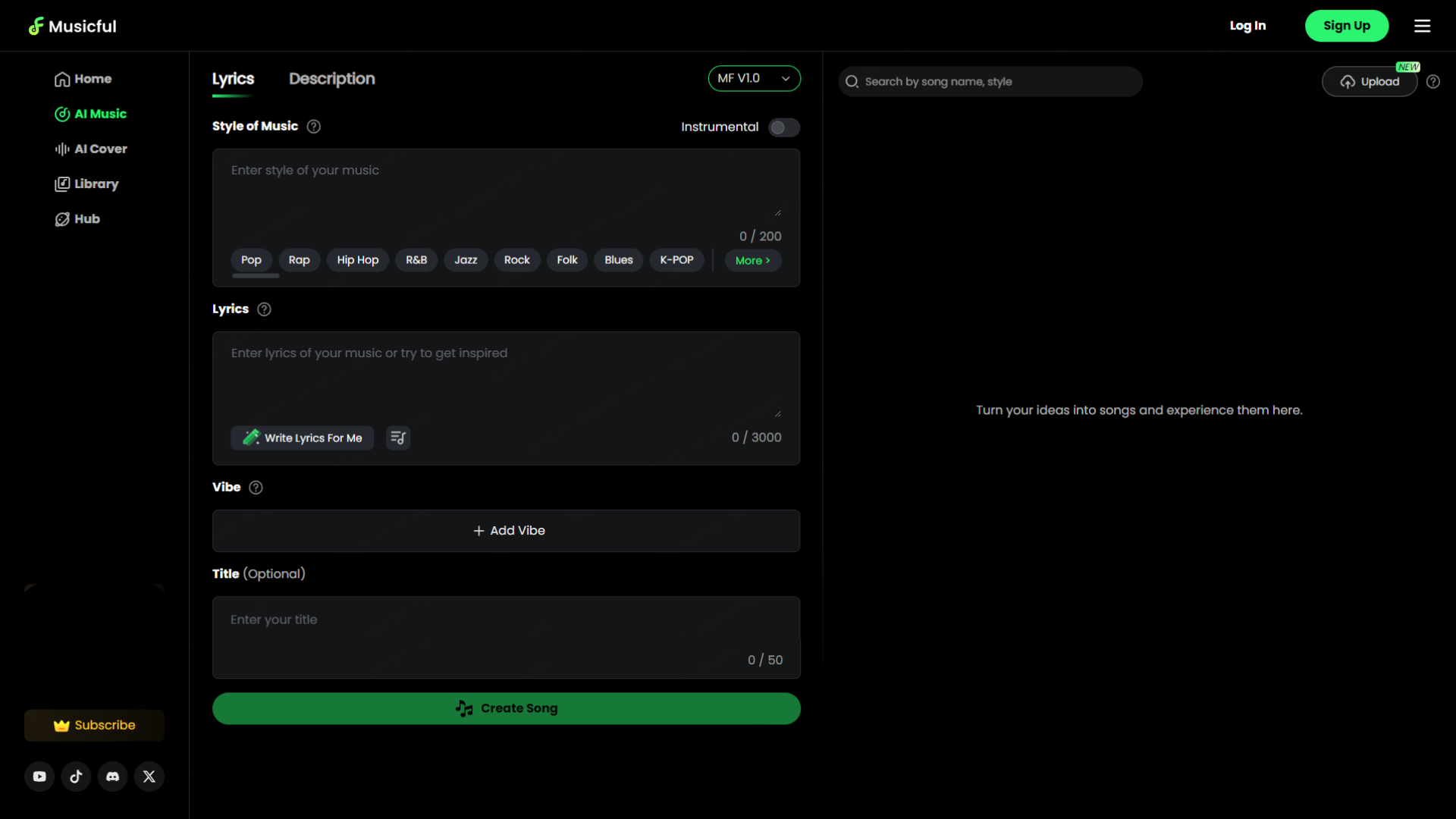

Most AI rap platforms operate by accepting one of three input types—lyrics, prompts, or voice—and generating rap tracks in seconds. Musicful (https://www.musicful.ai/ai-music-generator/) is one such platform that illustrates these workflows in practice.

Lyric-to-Rap Creation

In text-based mode, users input original lyrics. The system parses the structure—identifying line breaks, rhymes, and syllabic stress—and maps the content onto a beat framework. Musicful supports this by letting users define genre and flow characteristics, generating matching vocal performances and instrumentals in response.
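
As a rough illustration of the parsing step, the sketch below splits lyrics into verses on blank lines and labels each verse's end-rhyme scheme. It assumes that two lines rhyme when their last words share the same final two letters, which is a crude spelling-based stand-in for real phonetic analysis. All function names are hypothetical and do not describe Musicful's implementation.

```python
import re

def split_verses(lyrics: str) -> list[list[str]]:
    """Split lyrics into verses (separated by blank lines) of non-empty lines."""
    verses = []
    for block in re.split(r"\n\s*\n", lyrics.strip()):
        lines = [ln.strip() for ln in block.splitlines() if ln.strip()]
        if lines:
            verses.append(lines)
    return verses

def end_key(line: str, size: int = 2) -> str:
    """Last `size` letters of the final word: a crude proxy for its rhyme sound."""
    words = re.findall(r"[a-z']+", line.lower())
    return words[-1].replace("'", "")[-size:] if words else ""

def rhyme_scheme(lines: list[str]) -> str:
    """Label lines A, B, C... so that lines sharing an end key share a letter."""
    labels: dict[str, str] = {}
    scheme = []
    for line in lines:
        key = end_key(line)
        if key not in labels:
            labels[key] = chr(ord("A") + len(labels))
        scheme.append(labels[key])
    return "".join(scheme)

lyrics = """City lights are burning bright
I keep writing every night
Watch the rhythm start to flow
Every bar is part of the show

One more line
Right on time"""

verses = split_verses(lyrics)
print([rhyme_scheme(v) for v in verses])  # ['AABB', 'AB']
```

The second verse shows the limit of the spelling heuristic: "line" and "time" are a near rhyme by ear but get different labels here.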

Prompt-Based Generation

When no lyrics are provided, platforms like Musicful interpret natural language prompts—such as "dark drill with fast flow"—to generate the lyrical content, vocal tone, and instrumental backdrop. These prompts are converted into latent stylistic representations, which inform the system’s multi-modal output.

Voice-Initiated Output

Some platforms also support voice-based input. Musicful, for example, allows users to upload short spoken or hummed recordings. The system analyses timing, pitch, and energy, then constructs a rap around the input using AI-based vocal rendering and beat construction.
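
The three measurements named above (timing, pitch, and energy) can be approximated with very simple signal processing. The sketch below uses only the standard library and a synthetic 220 Hz tone in place of a real recording. The 8 kHz sample rate, frame length, threshold, and function names are all assumptions chosen for illustration, and zero-crossing pitch estimation is a basic stand-in for whatever analysis a real platform uses.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed for this example)

def rms(frame):
    """Root-mean-square amplitude, a simple measure of energy."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def frame_energies(samples, frame_len=400):
    """RMS energy of consecutive non-overlapping frames (50 ms at 8 kHz)."""
    return [
        rms(samples[i:i + frame_len])
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]

def first_onset(samples, frame_len=400, threshold=0.05):
    """Start time in seconds of the first frame louder than the threshold."""
    for idx, energy in enumerate(frame_energies(samples, frame_len)):
        if energy > threshold:
            return idx * frame_len / SAMPLE_RATE
    return None

def estimate_pitch(samples):
    """Approximate pitch in Hz from the rate of upward zero crossings."""
    rising = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    return rising * SAMPLE_RATE / len(samples)

# Synthetic input: 0.25 s of silence, a 0.5 s hum at 220 Hz, then silence.
tone = [0.5 * math.sin(2 * math.pi * 220 * n / SAMPLE_RATE) for n in range(4000)]
clip = [0.0] * 2000 + tone + [0.0] * 2000

print(first_onset(clip))      # when the hum starts
print(estimate_pitch(tone))   # close to 220 Hz
print(rms(tone))              # energy of the hummed segment
```

On real voice recordings, zero crossings are easily thrown off by noise and harmonics, so autocorrelation or a dedicated pitch tracker would be a more robust choice.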

Note:

Whatever the input type, the underlying architecture of these platforms shares a common goal: mapping user intent (written, spoken, or described) into synchronised musical output. Musicful (https://www.musicful.ai/) exemplifies this multi-input design, offering users different pathways into AI rap creation depending on their preferences or experience.

Part 3: Applications Across Creative Fields

AI rap has moved beyond traditional music production. Its fast turnaround and flexible formats now serve various creative and commercial uses.

Content creators use AI-generated rap in YouTube intros, podcast jingles, and social media content, saving time and bypassing licensing delays.

Game developers explore adaptive AI rap for dynamic dialogue and context-aware soundtracks, adding musical interactivity without voice actors.

Marketers leverage customised rap content for campaigns, especially those targeting youth or subcultures. Genre-aligned tracks tied to keywords offer new sonic branding formats.

Educators use AI-generated, rhythm-driven rap formats to make learning more memorable, especially in language learning and STEM outreach.

Independent creators like filmmakers and streamers turn to AI for affordable, original music—intros, soundtracks, and background audio—without studio access or licensing costs.

What unites these applications is a shared demand for rapid, scalable audio generation, especially when turnaround time, customisation, or licensing complexity would otherwise be prohibitive.

Part 4: Ethical, Cultural, and Legal Considerations

Despite its technical appeal, AI-generated rap raises several unresolved ethical and legal questions—many of them specific to the genre’s cultural context.

Cultural authenticity remains a core concern. Rap music has deep roots in African American history, and many fear that automated systems trained on these traditions risk flattening or misrepresenting their cultural significance. Critics warn that AI may unintentionally reproduce stereotypes or commodify identity without proper context.

Copyright and data provenance present additional issues. Many models are trained on large corpora of existing lyrics and performances, some of which are protected intellectual property. This introduces legal ambiguity around fair use, especially if generated content closely resembles source material.

There is also the matter of attribution. When an AI model generates lyrics or flows influenced by thousands of artists, who deserves credit? Most platforms assign full ownership to the end user, but this approach sidesteps deeper questions of derivative creativity and artistic contribution.

Finally, concerns have been raised about content saturation. As barriers to music creation fall, there’s a risk of platforms being overwhelmed with low-effort or repetitive tracks—making it harder for original human work to stand out. Some argue that discovery algorithms, platform policies, or even metadata standards may need to adapt to this influx.

As AI rap systems gain traction, these debates are likely to shape how the technology is regulated, distributed, and evaluated—not just as a tool, but as a participant in a historically rich creative ecosystem.

Conclusion

AI rap is moving from experimental to applied, powering real-time content creation across music, gaming, and media. Its next phase will centre on interactive control, cross-modal integration, and modular tools that enhance—not replace—human creativity.

For technologists, AI rap highlights both the strengths and limits of generative systems in culturally complex domains. As language, rhythm, and identity converge, thoughtful design will determine its future.