You’ve probably hesitated before asking an AI a difficult question. Not because you didn’t know what to ask, but because you knew the answer might depend on which model you were using. The fast one responds instantly but cuts corners. The smarter one thinks longer but breaks your flow.

That trade-off has shaped how people use AI for years. Speed came at the cost of accuracy. Reliability came with waiting. Now, Google is trying to erase that choice. This week, the company began rolling out Gemini 3 Flash as the default model for billions of users worldwide.

The technical reason this trade-off existed was simple. Deep reasoning requires more compute, and more compute takes time. Previous fast models like Gemini 1.5 Flash and Gemini 2.5 Flash were optimized for responsiveness by cutting verification steps. They answered quickly, but often guessed, skipped checks, and occasionally hallucinated. They were useful, but unreliable.

Gemini 3 Flash breaks that pattern. According to Google’s own benchmarks, the model scored 90.4% on GPQA Diamond, a test designed to measure PhD-level scientific reasoning. That’s not typical fast-model performance. On MMMU Pro, which evaluates how well an AI reasons across text and images together, it scored 81.2%. On SWE-bench Verified, a demanding coding benchmark built from real GitHub issues, it reached 78%, matching or even outperforming heavier models in Google’s lineup.

Those numbers matter less on their own than what they unlock. Tasks that once forced mode-switching no longer do. Legal document analysis can happen at conversational speed. Complex architectural coding decisions don’t require waiting. Scientific reasoning moves as quickly as casual questions. Instead of treating every prompt the same, Gemini 3 Flash promises to dynamically scale how much reasoning it applies based on what you ask.

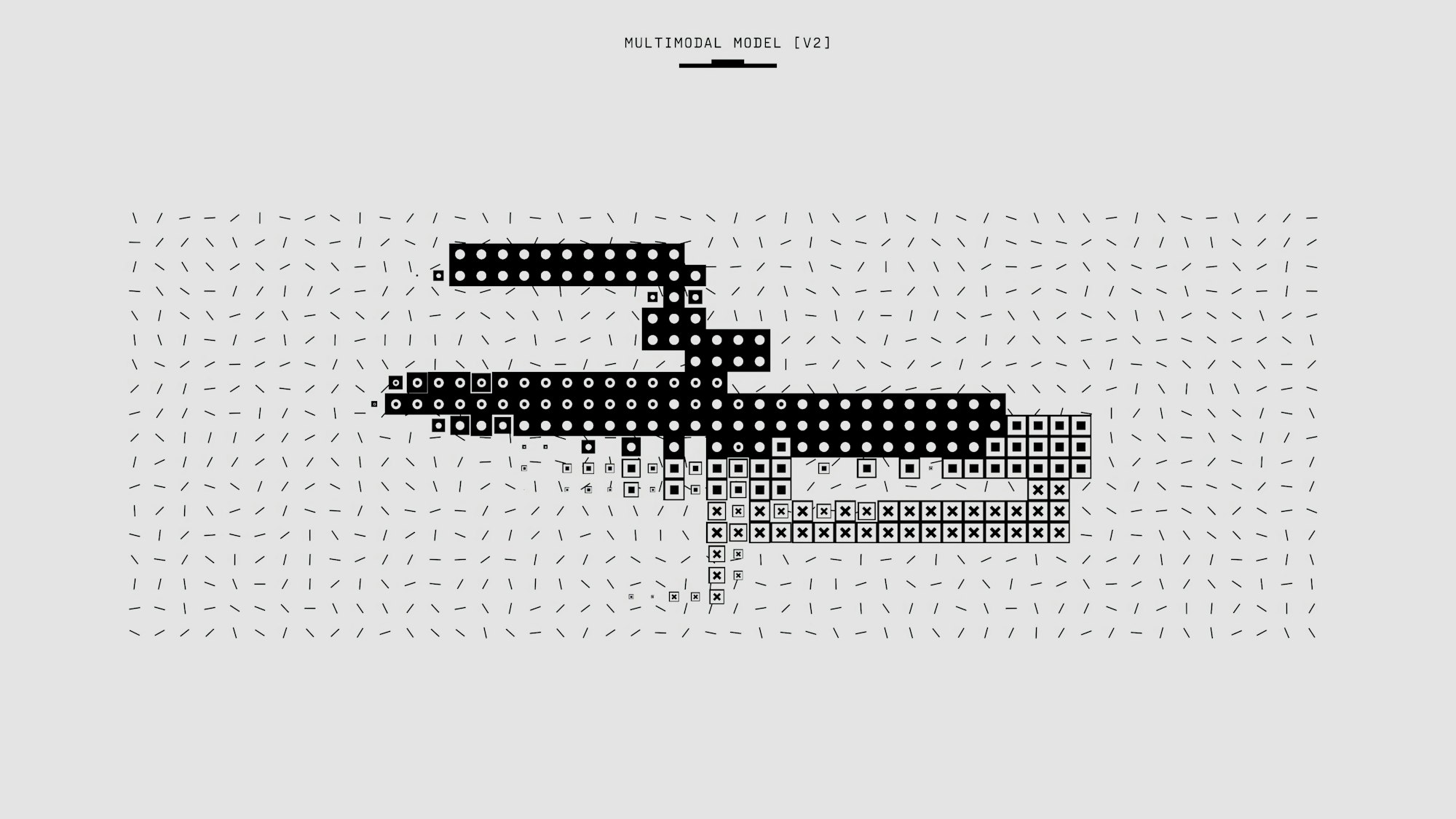

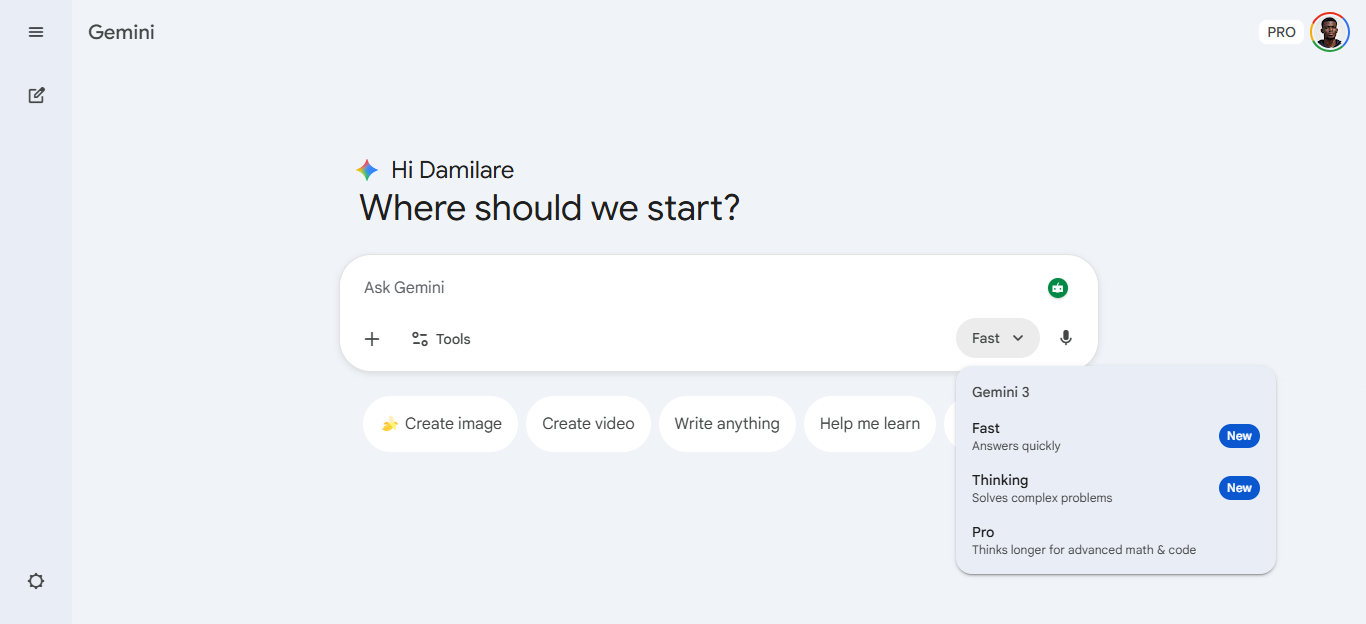

That approach shows up clearly in how Google now positions the Gemini app. There are three modes. Fast runs Gemini 3 Flash. Thinking uses the same model but allows extended reasoning for especially complex problems. Pro switches to Gemini 3 Pro for the heaviest tasks. What has changed is that the gap between them has narrowed so much that most users may rarely need to leave Fast at all.

Companies are already acting on that assumption. JetBrains, Figma, and Bridgewater Associates say they're using Gemini 3 Flash in production workflows that demand both speed and reliability. Since Google launched the Gemini 3 family in November, it says it has processed over one trillion tokens per day through its API. That kind of scale only works if the default option is dependable without constant intervention.

For developers, Gemini 3 Flash is priced at $0.50 per million input tokens and $3 per million output tokens. For everyday users accessing it through the Gemini app or AI Mode in Search, it’s free.
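To put those rates in concrete terms, here is a small back-of-the-envelope estimator. It is a minimal sketch that assumes only the two per-million-token rates quoted above and ignores any other pricing tiers or discounts Google may apply; the function name and the example workload (10,000 requests of 2,000 input and 500 output tokens each) are hypothetical, chosen purely for illustration.

```python
from decimal import Decimal

# Rates quoted in the article for Gemini 3 Flash developer access (USD per 1M tokens).
# Assumption: no other tiers, caching discounts, or modality-specific rates apply.
INPUT_USD_PER_MILLION = Decimal("0.50")
OUTPUT_USD_PER_MILLION = Decimal("3.00")
TOKENS_PER_MILLION = Decimal(1_000_000)


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Hypothetical helper: estimate the USD cost of one request from its token counts."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Decimal avoids floating-point rounding noise in currency arithmetic.
    input_cost = Decimal(input_tokens) / TOKENS_PER_MILLION * INPUT_USD_PER_MILLION
    output_cost = Decimal(output_tokens) / TOKENS_PER_MILLION * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests, each with 2,000 input and 500 output tokens.
    per_request = estimate_cost_usd(2_000, 500)
    total = per_request * 10_000
    print(f"per request: ${per_request}")
    print(f"total: ${total:.2f}")
```

Under those assumptions, each request costs about a quarter of a cent, and the whole hypothetical workload comes to roughly $25. Output tokens dominate because they are priced six times higher than input tokens.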

The timing for this update is hard to ignore. Gemini 3 Flash began rolling out just days after OpenAI launched GPT-5.2. Earlier reports suggested internal concern at OpenAI after ChatGPT traffic dipped while Google’s share grew. The AI race has evolved from "who builds the smartest model" to "who eliminates the wait time without sacrificing intelligence." OpenAI is pushing reasoning depth with models like o3. Google is betting most people would rather have 90% of that intelligence delivered instantly.

Here's what actually changes: that hesitation before hitting send on a complex question disappears. You stop second-guessing whether the fast model can handle it; you just ask. And for most tasks, you'll get an answer that would have required the "thinking" model a month ago, delivered before you've finished reading the first line.

That shift matters more than the benchmarks suggest. When you trust the default option, you use AI differently. You ask harder questions. You iterate faster. You stop treating it like a tool you need to carefully configure and start treating it like a conversation. Whether Google intended it or not, making fast mode genuinely capable might be the thing that finally makes AI feel natural rather than technical.