The current state of enterprise computing often feels like a rigid house. You can upgrade the furniture (faster chips, bigger GPUs, more memory), but the fixed walls (server boundaries) restrict how you use the space. As AI, cloud services, and real-time analytics demand massive, inconsistent resources, this traditional, fixed-architecture approach is hitting a limit: you can’t scale a single resource without buying an entirely new, expensive, and often underutilised server.

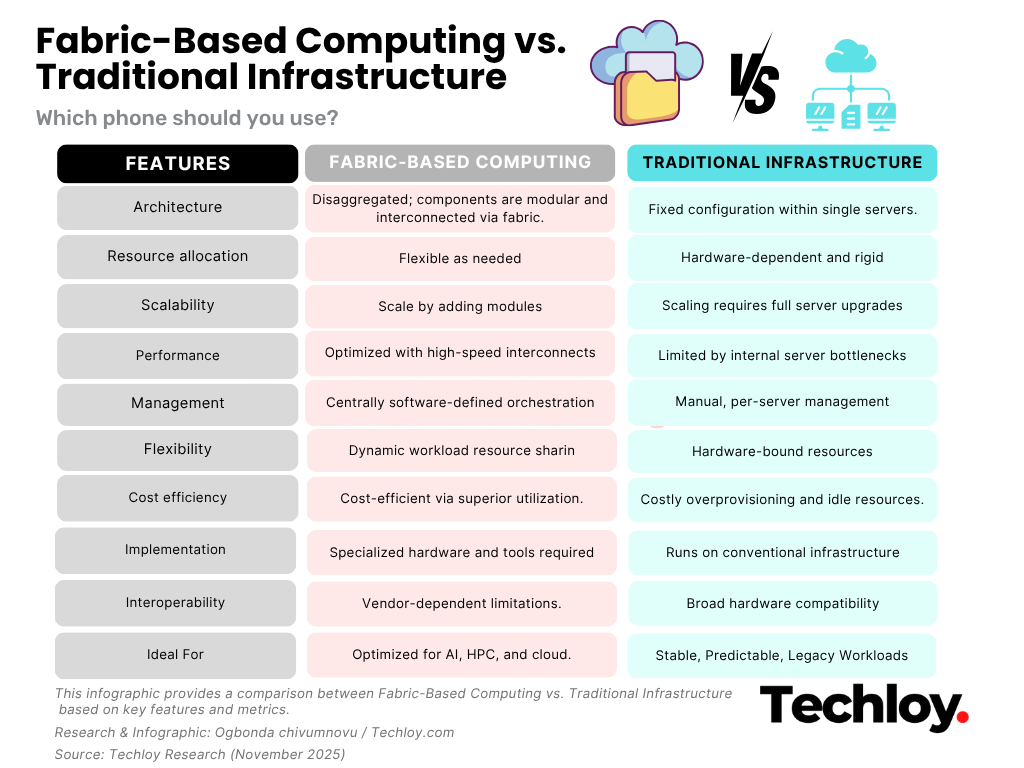

Fabric-based computing tries to break out of that fixed layout. Instead of bundling compute, memory, storage, and networking into discrete, fixed servers, it disaggregates them and connects everything via a high-speed, low-latency fabric. All the resources sit in a shared pool, and applications can pull what they need and give it back when they’re done. Nothing is stuck in one place; the system reshapes itself on the go.

What Is Fabric-Based Computing?

At its core, fabric-based computing (FBC) is an architectural approach where traditional hardware components are decoupled and interconnected through a high-speed, low-latency fabric. This fabric acts like a central nervous system, allowing resources to be dynamically pooled, provisioned, and reassigned without tearing down or rebuilding systems.

The result is a computing environment where hardware stops behaving like physical boxes and starts behaving like a fluid, software-defined world.

Components of Fabric-Based Computing

Each component works independently but remains interconnected, and each plays a clear role. These are the pieces that make the architecture work:

- Compute nodes

These are CPU or GPU units that no longer come bundled with local memory or storage. They attach to the fabric and can be assigned to any workload. Disaggregation prevents idle compute from sitting unused.

- Memory modules

Memory becomes a shared pool rather than something tied to each server. This improves efficiency, since available memory can be assigned wherever demand spikes.

- Storage systems

All storage sits behind the fabric instead of being attached to individual servers. Centralising it improves availability and gives any compute node fast access without local bottlenecks.

- Fabric interconnect

This is the fast communication layer that keeps the system functioning. Technologies such as InfiniBand, PCIe, and advanced Ethernet enable low-latency transfers between disaggregated components.

- Network interface modules

These handle traffic between the fabric and external systems. They keep workloads reachable and ensure data moves smoothly in and out of the cluster.

- Management and orchestration software

This is the control room. It provisions, monitors, and scales resources in real time. Automation tools and APIs help administrators move workloads around without touching physical hardware.

- Virtualisation layer

Virtualisation creates the abstraction that makes all this flexibility work. Workloads can run on virtual machines, containers, or bare-metal setups, independent of where the actual hardware sits.

How Fabric-Based Computing Works

The workflow centres on the fabric acting as a shared highway between disaggregated components. When an application starts, orchestration software assigns whatever compute, memory, or storage is needed by drawing from each pool. Because the interconnect delivers such low latency, a compute node can treat remote memory or storage almost as if it were local. As the workload scales up, it draws more from the pools; as it winds down, its resources are returned and reassigned, improving overall utilisation. This elastic approach suits environments where demand isn’t consistent, like AI training runs, multi-tenant cloud services, big data processing, or HPC tasks that spike suddenly.
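The allocate-and-release cycle described above can be sketched in a few lines of Python. This is only an illustrative model, not the API of any real orchestration product: the `ResourcePool` and `Orchestrator` classes, the pool names, and all capacities are hypothetical, and the fabric itself is not modelled.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """One disaggregated resource type (hypothetical units, e.g. cores or GiB)."""
    name: str
    capacity: int
    allocated: dict = field(default_factory=dict)  # workload name -> amount held

    @property
    def free(self) -> int:
        return self.capacity - sum(self.allocated.values())

    def allocate(self, workload: str, amount: int) -> None:
        if amount > self.free:
            raise RuntimeError(f"{self.name}: need {amount}, only {self.free} free")
        self.allocated[workload] = self.allocated.get(workload, 0) + amount

    def release(self, workload: str) -> int:
        # Returns the amount handed back to the pool (0 if nothing was held).
        return self.allocated.pop(workload, 0)


class Orchestrator:
    """Hypothetical control plane that draws from each pool on behalf of a workload."""

    def __init__(self, pools: dict) -> None:
        self.pools = pools

    def start(self, workload: str, request: dict) -> None:
        # Check every pool first so a failed request leaves nothing half-allocated.
        for kind, amount in request.items():
            if amount > self.pools[kind].free:
                raise RuntimeError(f"not enough {kind} for {workload}")
        for kind, amount in request.items():
            self.pools[kind].allocate(workload, amount)

    def stop(self, workload: str) -> None:
        # Hand everything the workload held back to the shared pools.
        for pool in self.pools.values():
            pool.release(workload)

    def free(self) -> dict:
        return {kind: pool.free for kind, pool in self.pools.items()}


# Hypothetical pool sizes and requests, chosen only for illustration.
orch = Orchestrator({
    "cpu_cores": ResourcePool("cpu_cores", 64),
    "memory_gib": ResourcePool("memory_gib", 512),
    "storage_tib": ResourcePool("storage_tib", 100),
})
orch.start("training-job", {"cpu_cores": 16, "memory_gib": 384})
orch.start("web-tier", {"cpu_cores": 8, "memory_gib": 32, "storage_tib": 2})
print(orch.free())  # {'cpu_cores': 40, 'memory_gib': 96, 'storage_tib': 98}

orch.stop("training-job")
print(orch.free())  # {'cpu_cores': 56, 'memory_gib': 480, 'storage_tib': 98}
```

Note that the memory-heavy training job takes a large share of the memory pool without claiming a matching share of cores, and everything it held becomes available to other workloads as soon as it stops.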

Why Fabric-Based Computing Is Important

The shift is happening because the old way of building computers simply can’t keep up anymore. Today’s workloads pull in every direction at once: AI models need huge memory bandwidth, cloud apps spike without warning, and real-time services rely on near-instant responses. Traditional hardware wasn’t designed for this level of constant stress or unpredictability.

Fabric-based computing matters because it removes those bottlenecks. It lets memory, compute, and storage flow across a shared pool instead of being locked inside individual machines. That means faster scaling, fewer performance chokepoints, and far better use of existing hardware.

In simple terms, FBC keeps modern workloads running smoothly without forcing companies to endlessly add more servers.

Benefits and Challenges of Fabric-Based Computing

The promise of FBC lies in efficiency and adaptability. By pooling resources instead of binding them to physical servers, data centres can reduce idle hardware and lower long-term operational costs. Scaling becomes easier, and workloads can be deployed or rebalanced with minimal delay because most changes happen through software rather than hardware swaps. Fault tolerance also improves since failures affect components, not full servers, making recovery smoother.
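To make the idle-hardware argument concrete, here is a rough back-of-the-envelope sketch. Every number in it is hypothetical (unit sizes and workload demands are invented for illustration), and it ignores fabric cost, latency, and headroom. It compares how much hardware is needed when each workload must fit inside one fixed server against sizing the compute and memory pools independently.

```python
import math

# Hypothetical unit sizes: a fixed server bundles both; a fabric buys them separately.
CORES_PER_UNIT = 32
MEM_GIB_PER_UNIT = 256

# Hypothetical workloads: (name, cores needed, memory needed in GiB).
# Each one is assumed to fit within a single fixed server.
workloads = [
    ("ai-training", 8, 240),
    ("in-memory-db", 4, 200),
    ("web", 24, 32),
    ("batch", 28, 48),
]


def fixed_servers_needed(jobs):
    """First-fit placement: a workload must fit entirely inside one server."""
    servers = []  # remaining [cores, mem] on each purchased server
    for _name, cores, mem in jobs:
        for remaining in servers:
            if remaining[0] >= cores and remaining[1] >= mem:
                remaining[0] -= cores
                remaining[1] -= mem
                break
        else:
            # No existing server has room for both dimensions, so buy another.
            servers.append([CORES_PER_UNIT - cores, MEM_GIB_PER_UNIT - mem])
    return len(servers)


def pooled_units_needed(jobs):
    """Pooled sizing: each resource type is provisioned from its own total demand."""
    total_cores = sum(cores for _, cores, _ in jobs)
    total_mem = sum(mem for _, _, mem in jobs)
    return (
        math.ceil(total_cores / CORES_PER_UNIT),
        math.ceil(total_mem / MEM_GIB_PER_UNIT),
    )


servers = fixed_servers_needed(workloads)
compute_units, memory_units = pooled_units_needed(workloads)
print(f"fixed:  {servers} servers = {servers * CORES_PER_UNIT} cores, "
      f"{servers * MEM_GIB_PER_UNIT} GiB")
print(f"pooled: {compute_units} compute units = {compute_units * CORES_PER_UNIT} cores, "
      f"{memory_units} memory units = {memory_units * MEM_GIB_PER_UNIT} GiB")
```

With these made-up figures, the fixed layout needs three servers (96 cores) to satisfy 64 cores of demand, because memory-heavy workloads strand cores on the servers they occupy. The pooled layout needs two compute units and three memory units. Real savings depend entirely on the workload mix.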

At the same time, the architecture introduces complexity. Setting up a fabric-based environment requires new ways of thinking about infrastructure, along with high-performance interconnects that can be costly at the start. Many solutions rely on proprietary technologies, which creates concerns around vendor lock-in. Orchestration tools must be sophisticated enough to manage disaggregated resources efficiently, and because components are more distributed, securing communication paths becomes even more important. The lack of universal standards also makes integrating legacy systems more challenging.

The Future of Fabric-Based Computing

As data centres evolve to support AI workloads, real-time analytics, and increasingly distributed applications, the appeal of flexible and scalable architectures grows. Fabric-based computing fits neatly into that future.

Emerging technologies like CXL (Compute Express Link), which is built on the PCIe physical layer, allow memory and accelerators to be attached and shared across servers with cache-coherent, low-latency access, while next-generation PCIe provides high-bandwidth, low-latency connections between compute, storage, and networking modules. Together, these innovations make it easier to move resources dynamically and reduce the bottlenecks that traditional servers face. Improved orchestration platforms are also simplifying deployment, allowing companies to manage complex, disaggregated infrastructure with less manual intervention.

Over time, FBC could become central to next-generation cloud systems and large enterprise environments, where efficiency, performance, and responsiveness are crucial.

Conclusion

Fabric-based computing is a response to the limits of conventional servers in a world dominated by AI, big data, and real-time applications. By separating and pooling compute, memory, storage, and networking, FBC allows resources to be allocated precisely where and when they are needed. This leads to better efficiency, higher resilience, and faster scaling without constant hardware expansion.

Whether it becomes the dominant model or remains a specialised solution, FBC represents a shift toward a more adaptable, responsive computing infrastructure. It signals a move from rigid, one-size-fits-all servers to systems that can bend and stretch to meet the demands of modern workloads, making data centres more fluid and efficient.