The ChatGPT creator, OpenAI, is no longer just focused on building smarter models; it’s now in full defence mode. What was once a collaborative research culture has evolved into something closer to a classified defence operation, all sparked by a serious scare earlier this year.

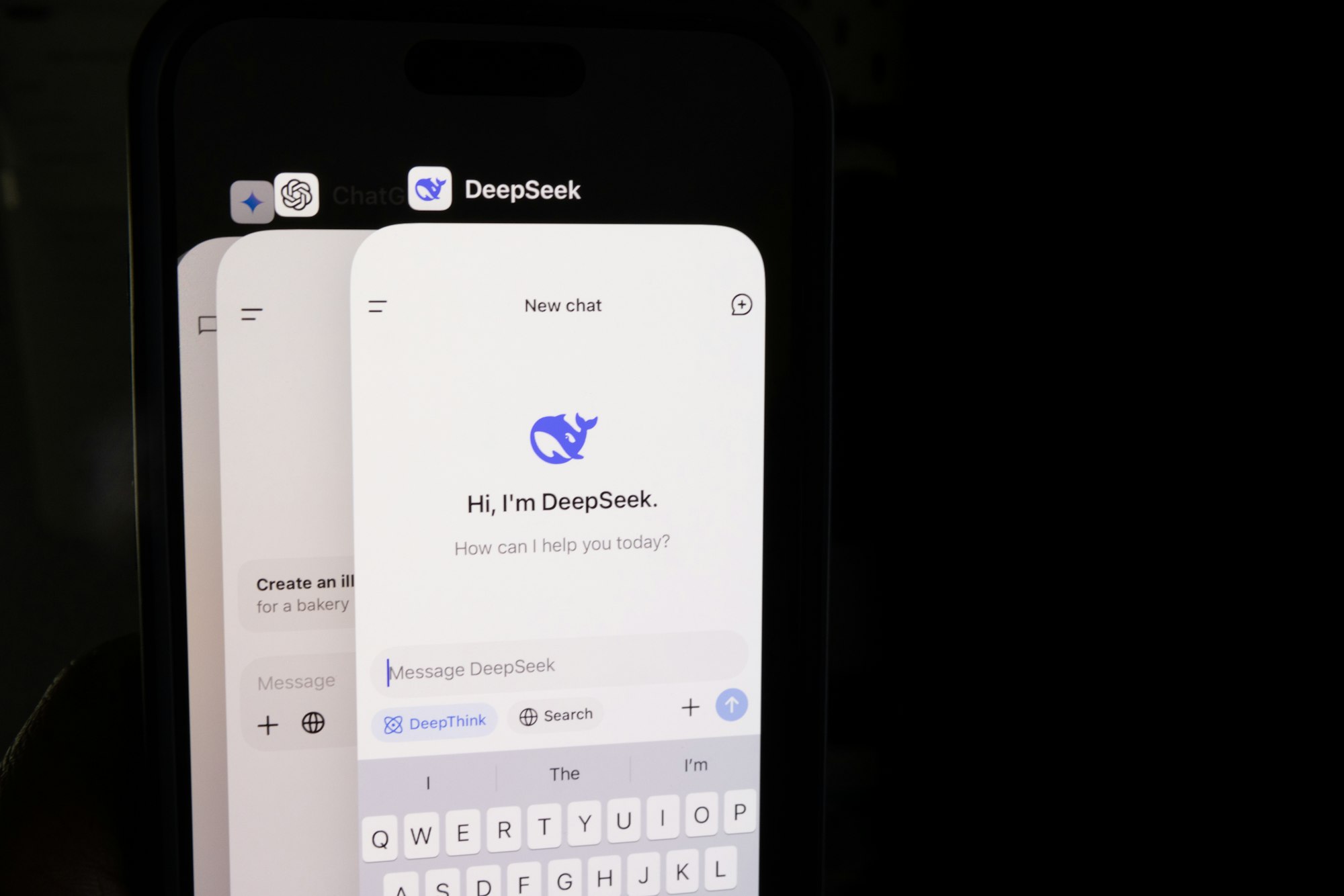

Per the Financial Times, the tipping point came when Chinese startup DeepSeek released a language model that looked eerily similar to OpenAI’s. The suspicion was that DeepSeek trained its model using a technique called “distillation,” essentially training a new model on OpenAI’s outputs to recreate its performance without access to its weights, training data or source code. It’s like learning a language by mimicking someone else’s conversation and doing it well enough to pass for fluent.
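
For readers who want to see the idea in miniature, here is a toy sketch of distillation. It assumes a made-up `teacher` function standing in for a model that can only be queried, and a two-parameter `student` fitted purely to the teacher's answers. Every name and number here is hypothetical; it does not describe how DeepSeek or OpenAI actually train anything.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def teacher(x):
    """Hypothetical black-box model: callers only ever see its output."""
    hidden_w, hidden_b = 2.0, -1.0  # illustrative values, never read by the student
    return sigmoid(hidden_w * x + hidden_b)


def distill(queries, epochs=2000, lr=0.5):
    """Fit a student to the teacher's outputs with plain gradient descent."""
    soft_labels = [teacher(x) for x in queries]  # the only signal the student gets
    w, b = 0.0, 0.0
    n = len(queries)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, p in zip(queries, soft_labels):
            # Gradient of cross-entropy against the teacher's soft label
            err = sigmoid(w * x + b) - p
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


random.seed(0)
queries = [random.uniform(-3, 3) for _ in range(200)]
w, b = distill(queries)

# Largest disagreement between student and teacher on a few probe inputs
max_gap = max(
    abs(sigmoid(w * x + b) - teacher(x)) for x in [-2, -1, 0, 1, 2]
)
```

The student never touches the teacher's internals, yet ends up answering almost identically, which is why the technique is hard to prevent once a model's outputs are publicly available.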

Internally, this set off alarm bells. OpenAI quickly launched what insiders describe as “information tenting,” a new protocol where only cleared employees can even mention certain projects. During the development of its o1 model, nicknamed “Strawberry,” engineers had to double-check clearance before discussing it in the break room. If you weren’t on the list, you were out of the loop.

And the walls didn’t stop there. OpenAI has moved key systems offline, introduced fingerprint-based access to sensitive spaces, and implemented a “deny-by-default” internet policy. Even internal web access needs special approval. Security, once an afterthought, is now baked into the company’s daily operations.
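
A “deny-by-default” policy inverts the usual rule: instead of blocking known-bad destinations, everything is blocked unless it has been explicitly approved. A minimal sketch of the idea follows, assuming a hypothetical allowlist of hostnames. The hostnames and the function name are invented for illustration and say nothing about OpenAI's actual configuration.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; these hostnames are placeholders for illustration.
APPROVED_HOSTS = {"packages.example", "mirror.internal.example"}


def is_egress_allowed(url, approved_hosts=APPROVED_HOSTS):
    """Allow a request only if its host is explicitly approved."""
    host = urlsplit(url).hostname  # None when the URL has no parsable host
    return host is not None and host in approved_hosts
```

Anything not on the list, including malformed URLs and lookalike subdomains, falls through to a refusal, so forgetting to add a rule errs on the side of blocking access.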

Leadership changes mirror the shift. OpenAI has brought on heavyweights like Dane Stuckey, former CISO at Palantir, and retired U.S. Army General Paul Nakasone, now on the board with a mandate to reinforce cybersecurity. VP Matt Knight is also leading efforts to test defences using OpenAI’s own AI tools.

This shift is more than a precaution; it’s a response to real-world threats. DeepSeek isn’t just another rival; it represents the growing pressure from global players to catch up by any means necessary. And as U.S. officials continue sounding alarms over foreign tech espionage, especially from China, OpenAI seems determined to stay a step ahead.

While rivals like Anthropic and Meta maintain more open development cultures, OpenAI is heading in the opposite direction, betting that secrecy and security are now essential to keep its edge in the AI arms race.