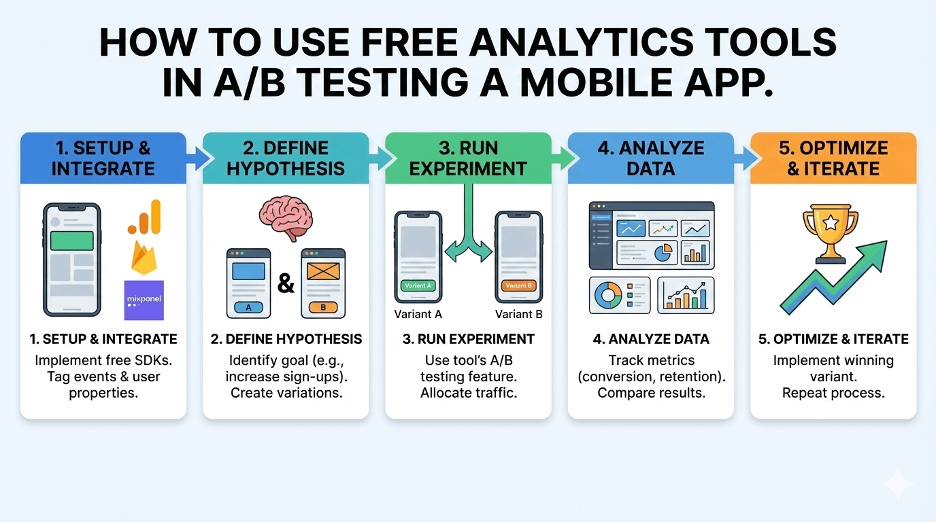

A/B testing is one of the most effective ways to optimize a mobile app, but many developers assume it is costly because it requires a paid analytics platform. In practice, several free app analytics tools provide robust A/B testing capabilities that help teams make data-driven decisions without subscription costs eating into limited budgets.

This guide walks through the steps for implementing A/B tests with freely available tools, in a practical way that leads to actionable improvements grounded in user behavior analysis.

Learn the Basics of A/B Testing in Mobile Apps

A/B testing compares two variants of an app element to determine which one performs better. Whether the element is the onboarding process, feature placement, or a pricing model, the approach is the same: divide users into groups, show each group a different variant, and measure which one leads to better results.

The challenge is not understanding the concept but running tests in a disciplined way that does not demand many resources. Free analytics tools democratize this process, making conversion rate optimization available to indie developers and large publishers alike.

The key components every mobile A/B test needs:

- A clear hypothesis about what the change will improve.

- Clear success metrics aligned with business objectives.

- Enough traffic to reach statistically significant results.

- Proper user segmentation to avoid bias.

- Infrastructure to track the relevant events.

Without these basics, even advanced app analytics tools will yield unreliable data that leads to bad decisions.
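
One way to keep these components honest is to write them down before launch and check that none is empty. The sketch below is only an illustration in Python: `ExperimentPlan`, its field names, and the example values are hypothetical and do not come from any analytics platform.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentPlan:
    """Hypothetical pre-launch checklist; all field names are illustrative."""
    name: str
    hypothesis: str
    success_metric: str
    min_users_per_variant: int
    segmentation_rule: str
    tracked_events: list = field(default_factory=list)

    def missing_components(self):
        """Return the names of required components that are still empty."""
        missing = []
        if not self.hypothesis.strip():
            missing.append("hypothesis")
        if not self.success_metric.strip():
            missing.append("success metric")
        if self.min_users_per_variant <= 0:
            missing.append("traffic target")
        if not self.segmentation_rule.strip():
            missing.append("segmentation rule")
        if not self.tracked_events:
            missing.append("tracked events")
        return missing


# Example plan with made-up values; the traffic target has not been set yet.
plan = ExperimentPlan(
    name="onboarding_v2",
    hypothesis="A shorter welcome flow raises tutorial completion",
    success_metric="tutorial completion rate",
    min_users_per_variant=0,
    segmentation_rule="new users, random assignment",
    tracked_events=["tutorial_start", "tutorial_complete"],
)
print(plan.missing_components())
```

A plan that still reports missing components is a signal to hold the launch, whatever tool ends up running the test.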

Choose the Right Free Analytics Platform

Not all free analytics solutions offer the same level of A/B testing support. Some provide only basic event tracking, with no built-in experimentation or dedicated testing modules in their free tiers.

Google Analytics for Firebase stands out for its mobile-specific features, such as custom event tracking for in-app actions. The platform automatically tracks user engagement metrics and lets teams define their own conversion events to match their objectives.

The free version of Amplitude offers strong behavioral analytics, which is especially useful for mapping user journeys through complex app flows. It also supports cohort tracking, which makes the platform particularly effective for teams investigating how different user groups respond to tested changes.

The free version of Mixpanel offers good funnel analysis features, which help identify the stages of a process where users drop off. This visibility is essential when experimenting with changes to tutorials or user onboarding that aim to raise completion rates.

Set Up Your First A/B Test

Identify What to Test

Begin by reviewing your current app performance dashboards for friction points. A high drop-off rate during onboarding indicates that different welcome flows are worth testing. Low feature adoption rates suggest that users do not understand what the app can do, which can be addressed with UI tests or messaging tests.

Competitive intelligence offers more ideas for tests. When app competitor analysis tools reveal successful patterns across similar apps, testing whether those approaches work for your audience makes strategic sense. Copying competitors without testing, though, is usually a mistake: what works for their users may not work for yours.

Prioritize tests with high potential impact. Minor tweaks rarely move core metrics by much, whereas changes to the core value proposition or to feature prominence can have a dramatic effect on retention rates.

Configure Tracking Events

Good instrumentation separates useful tests from wasted time. Before introducing variations, make sure the analytics platform records all the relevant user actions. This includes screen flow analysis of movement between screens and session recording of interaction patterns.

Free platforms usually limit the volume of custom event tracking, so events need to be selected thoughtfully.

Focus on tracking:

- Specific actions tied to the test hypothesis.

- Milestone achievements that signal progress.

- Exit points that show where users abandon the flow.

- Time-based metrics such as engagement duration.

- Goal completions that mark the desired outcome.

Do not track irrelevant vanity metrics. Knowing how many screen views occurred matters less than knowing whether users took the intended actions.
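
One way to enforce this discipline in code is to route every tracking call through a small allow-list, as in the Python sketch below. `EventBudget`, the event names, and the `max_distinct` limit are all hypothetical; real quotas differ per platform and should be taken from its documentation, and the `sent` list stands in for whatever SDK call actually ships the event.

```python
class EventBudget:
    """Hypothetical guard that only accepts events planned for the test."""

    def __init__(self, allowed_events, max_distinct):
        allowed = frozenset(allowed_events)
        # max_distinct is an assumed quota, not a real platform limit.
        if len(allowed) > max_distinct:
            raise ValueError("planned events exceed the distinct-event budget")
        self.allowed = allowed
        self.sent = []  # stand-in for the analytics SDK call

    def track(self, name, **params):
        """Record the event if it is on the allow-list; report whether it was kept."""
        if name not in self.allowed:
            return False  # vanity or unplanned event: dropped
        self.sent.append({"name": name, "params": params})
        return True


budget = EventBudget(
    ["tutorial_start", "tutorial_step", "tutorial_exit", "tutorial_complete"],
    max_distinct=10,
)
budget.track("tutorial_start", variant="B")
budget.track("screen_view", screen="settings")  # not planned, so ignored
print(len(budget.sent))
```

Keeping the allow-list next to the test hypothesis makes it easy to see which events the analysis will depend on.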

Split User Traffic

Most free app analytics tools support percentage-based traffic splitting through feature flags or remote configuration. The classic method places half the users in each variant, but unequal splits are useful when testing potentially risky changes.

When dividing traffic, geographic distribution and device compatibility matter. If iOS and Android users behave differently, it is worth running a separate test on each platform instead of pooling their results, which may blur platform-specific trends.

Proper user segmentation keeps the comparison groups fair. Random assignment should produce comparable groups of new users, while existing users may need special handling so that the abrupt appearance of new features does not confuse them.
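
Feature flag and remote configuration services handle the split for you, but the underlying idea is simple enough to sketch. The Python example below shows one common approach, hashing a user ID into a stable bucket; it is an illustration under assumed names (`assign_variant`, the experiment key, the weights), not how any particular platform implements it.

```python
import hashlib


def assign_variant(user_id, experiment, weights):
    """Deterministically map a user to a variant in proportion to the weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Hashing experiment + user keeps the assignment stable across sessions
    # and independent between experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1]
    cumulative = 0.0
    for name, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return name
    return list(weights)[-1]  # fallback for rounding at the upper edge


# Classic 50/50 split, and a cautious 90/10 split for a risky change.
print(assign_variant("user-123", "welcome_flow", {"A": 0.5, "B": 0.5}))
print(assign_variant("user-123", "new_paywall", {"control": 0.9, "risky": 0.1}))
```

Because the assignment depends only on the user ID and the experiment key, a returning user always sees the same variant without any stored state.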

Measure and Interpret Results

Determine Statistical Significance

Tests that run too briefly give misleading results. Although the exact duration depends on traffic volume, a typical mobile A/B test needs a window of at least two weeks to capture the different usage patterns of weekdays and weekends.
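
To turn "depends on traffic volume" into a number, a rough estimate can be made from the baseline conversion rate and the smallest lift worth detecting. The Python sketch below uses the standard normal-approximation formula for comparing two proportions; the 5% significance level, 80% power, and the example rates and traffic figures are assumptions to adjust, and the two-week floor simply mirrors the guideline above.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline, expected, alpha=0.05, power=0.8):
    """Approximate users needed per variant to detect baseline -> expected."""
    if baseline == expected:
        raise ValueError("expected rate must differ from baseline")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    n = (z_alpha + z_beta) ** 2 * variance / (expected - baseline) ** 2
    return math.ceil(n)


def estimated_duration_days(n_per_variant, variants, daily_users, min_days=14):
    """Days needed to fill all variants, never shorter than min_days."""
    days_for_traffic = math.ceil(n_per_variant * variants / daily_users)
    return max(days_for_traffic, min_days)


# Assumed example: 10% baseline conversion, hoping to detect a lift to 12%.
n = sample_size_per_variant(0.10, 0.12)
print(n, estimated_duration_days(n, variants=2, daily_users=500))
```

Smaller expected lifts increase the required sample size sharply, which is one reason low-traffic apps do better with bold, focused changes.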

Statistical significance tests check whether observed differences are real improvements or the result of chance. When an analytics platform has no built-in significance testing, it can be supplemented with free tools such as Evan Miller's online calculators or other online statistical significance calculators.
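
For teams that prefer to check the numbers themselves, a two-proportion z-test is one common way to compare conversion rates between two variants. The Python sketch below is a minimal version using only the standard library; the function name and the example counts are made up, and it is not necessarily the method used by any particular online calculator.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up counts: 200 of 2000 users converted on A, 260 of 2000 on B.
z, p = two_proportion_z_test(200, 2000, 260, 2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below the chosen threshold (0.05 is a common convention) suggests the difference is unlikely to be chance alone, provided the test ran for its planned duration.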

Look beyond the primary metric to secondary metrics that reveal unintended effects. An increase in conversion can hurt retention at the same time if it attracts users who would not become long-term customers.

Explore Patterns of User Behavior

Quantitative metrics tell you what happened; qualitative analysis explains why. Session duration may go up, but to understand whether users value the new feature or simply struggle to use it, you also need to look at the engagement rate and monitor the error rate.

Free analytics platforms usually include cohort tracking that shows how test groups behave over the long term. A variant that produces better initial metrics but lower monthly active user numbers points to a short-term gain at the expense of long-term problems.
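
If a platform's cohort report is not granular enough, the same comparison can be made from exported data. The Python sketch below computes day-N retention per variant; the input shapes (`installs` as a mapping of user ID to variant and install date, `activity` as pairs of user ID and active date) are assumptions about what an export might look like, not a real export format.

```python
from collections import defaultdict
from datetime import date


def retention_by_variant(installs, activity, day):
    """Share of each variant's users who were active exactly `day` days after install."""
    retained = defaultdict(set)
    for user_id, active_date in activity:
        if user_id not in installs:
            continue  # activity from users outside the experiment
        variant, install_date = installs[user_id]
        if (active_date - install_date).days == day:
            retained[variant].add(user_id)
    cohort_sizes = defaultdict(int)
    for variant, _ in installs.values():
        cohort_sizes[variant] += 1
    return {v: len(retained[v]) / size for v, size in cohort_sizes.items()}


# Tiny made-up data set for illustration.
installs = {
    "u1": ("A", date(2024, 1, 1)),
    "u2": ("A", date(2024, 1, 1)),
    "u3": ("B", date(2024, 1, 2)),
    "u4": ("B", date(2024, 1, 2)),
}
activity = [
    ("u1", date(2024, 1, 8)),
    ("u2", date(2024, 1, 3)),
    ("u3", date(2024, 1, 9)),
    ("u4", date(2024, 1, 9)),
]
print(retention_by_variant(installs, activity, day=7))
```

Comparing day-1, day-7, and day-30 values side by side makes it easier to spot a variant that wins early and fades later.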

Behavioral analytics is especially useful when tests give unexpected results. When the hypothesis predicts that Variant A will beat Variant B but the results show otherwise, digging into usage patterns usually reveals why the assumptions were wrong.

Major A/B Testing Mistakes to Avoid

When many variables are tested at the same time, it is impossible to determine which change produced the results. Multivariate testing requires far more traffic than most apps have, so focused single-variable tests are more realistic for indie teams with modest traffic.

Another common mistake is ending tests prematurely. Early results often look dramatic, yet they tend to regress toward the mean as more data accumulates. Patient testing produces valuable insights; hasty decisions made on incomplete data squander development resources.

To avoid flawed tests, do not overlook mobile-specific considerations. Load time measurements differ across connection types because they depend on network performance. Speed-sensitive tests need traffic segmented by connection quality to produce useful insights.
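
As an illustration of that segmentation, the Python sketch below groups load-time samples by connection type and variant before summarizing them. The tuple layout, the connection labels, and the choice of the median as the summary are assumptions made for the example.

```python
from collections import defaultdict
from statistics import median


def median_load_time_by_segment(samples):
    """Median load time in ms for each (connection_type, variant) pair."""
    grouped = defaultdict(list)
    for variant, connection_type, load_ms in samples:
        grouped[(connection_type, variant)].append(load_ms)
    return {key: median(values) for key, values in grouped.items()}


# Made-up samples: (variant, connection type, load time in milliseconds).
samples = [
    ("A", "wifi", 400),
    ("A", "wifi", 600),
    ("B", "wifi", 500),
    ("A", "cellular", 1500),
    ("B", "cellular", 900),
    ("B", "cellular", 1100),
]
for segment, value in sorted(median_load_time_by_segment(samples).items()):
    print(segment, value)
```

Reporting each connection type separately keeps a variant that only helps on fast networks from looking like a universal win.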

Other pitfalls to avoid:

- Running tests during unusual periods (holidays, major events).

- Ignoring differences in OS distribution.

- Failing to document test parameters.

- Neglecting to verify tracking before launch.

- Assuming that correlation implies causation.

Conclusion

Free analytics tools offer everything needed to run meaningful mobile A/B tests that drive measurable change. Success comes from careful test design, adequate measurement, and patient analysis more than from sophisticated tools. Teams that work from a clear hypothesis, a sufficient sample size, and rigorous interpretation will consistently generate valuable insights, even on a small budget.

For teams ready to move beyond basic optimization to competitive strategy, Appark.ai provides a comprehensive approach to mobile app insight. The platform lets you view top charts, compare competitor apps by category and region, and track market changes without manual monitoring, so that competitive knowledge can be turned into a competitive advantage.

Dive in. Rather than running one clever A/B test and forgetting about it, start today with the fundamentals, focus on the metrics that matter, and keep learning. Build a culture of experimentation.