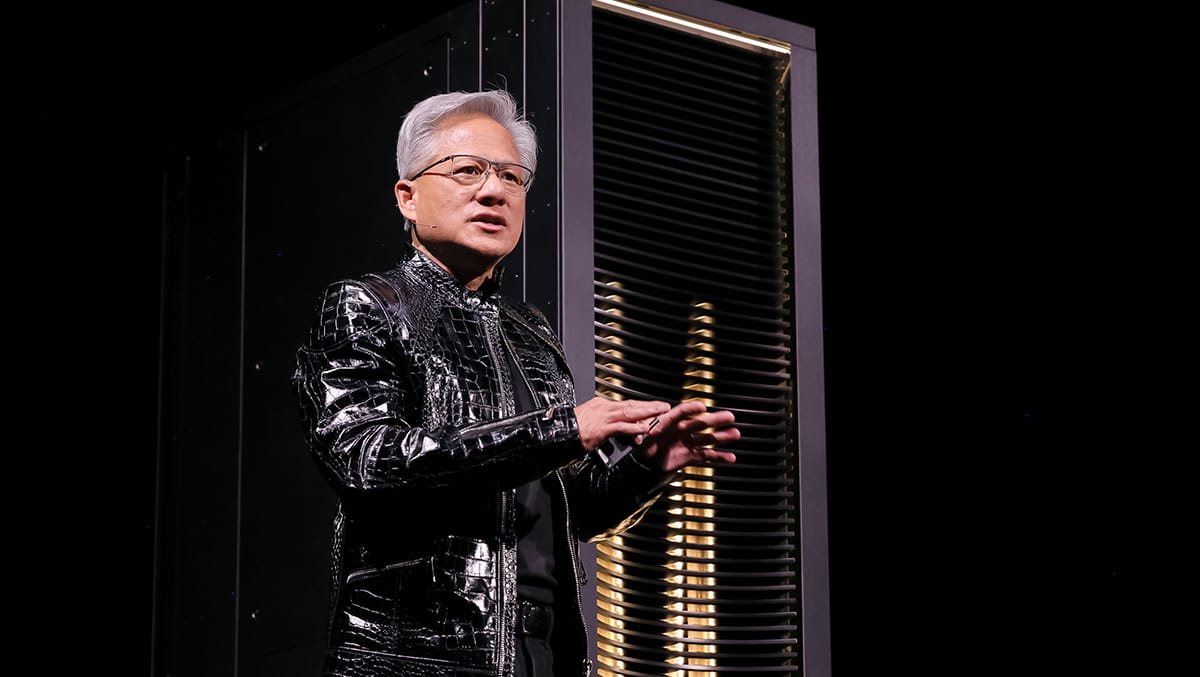

During NVIDIA's CES 2026 keynote on Monday, Jensen Huang, CEO of the American GPU giant, announced big plans for AI adoption and innovation in 2026.

The company unveiled its Rubin platform, a complete redesign of its computing system. Huang says this new system is necessary because AI is growing so fast that older technology can’t keep up.

But the announcements went far beyond faster chips. NVIDIA introduced a self-driving AI that can explain its decisions in real time, a solution to the "data traffic jams" that slow down big AI systems, and upgraded gaming tech that delivers movie-quality graphics at very high frame rates.

Here are all the major announcements from NVIDIA’s CES 2026 keynote.

Rubin platform

The Rubin platform, named after astronomer Vera Rubin, solves a major problem: AI needs more computing power than chipmakers can physically squeeze onto a standard chip.

“Models are increasing by a factor of 10 every year. Token generation is increasing 5x per year. We had no choice but to design every chip over again,” Huang told the Las Vegas audience.

The platform delivers 5x the performance of its predecessor, Blackwell, while increasing the transistor count by only 1.6x. In simple terms, NVIDIA has made the chips significantly faster and more efficient without simply making them five times larger or more expensive to manufacture, a feat achieved by redesigning all six of the platform's chips at once.
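Taking the two headline figures at face value (they are NVIDIA's own numbers, and real-world gains will vary by workload), the implied improvement per transistor works out to roughly:

$$
\frac{5\times\ \text{performance}}{1.6\times\ \text{transistors}} = 3.125 \approx 3.1\times\ \text{performance per transistor}
$$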

The breakthrough came from a new technology called NVFP4. Think of it as "smart math": it dynamically adjusts how much detail the chip uses for calculations, lowering the precision (using fewer bits per number) to speed things up when high detail isn’t needed and raising it again when it is.
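To make "fewer bits per number" concrete, here is a minimal sketch of block-scaled 4-bit floating-point quantization, the general idea NVIDIA has publicly described for NVFP4: each value is stored as a tiny 4-bit float (the E2M1 layout), and each small block of values shares one scale factor. The block size of 16 and the function names are assumptions for illustration; this simplified version keeps the scale as an ordinary Python float rather than in the compact format real hardware uses, and it does not model how Rubin decides when to switch precision.

```python
# Magnitudes a 4-bit E2M1 float can represent (1 sign, 2 exponent, 1 mantissa bit).
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # assumption: number of values sharing one scale factor


def quantize_block(block):
    """Map one block of numbers to 4-bit values plus a single shared scale."""
    amax = max(abs(v) for v in block)
    # Choose the scale so the block's largest magnitude lands on the top of the grid (6.0).
    scale = amax / E2M1_MAGNITUDES[-1] if amax > 0 else 1.0
    codes = []
    for v in block:
        target = abs(v) / scale
        # Round to the nearest representable 4-bit magnitude, then restore the sign.
        nearest = min(E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        codes.append(nearest if v >= 0 else -nearest)
    return codes, scale


def fake_quantize(values):
    """Quantize, then dequantize, to expose the rounding error of 4-bit storage."""
    out = []
    for start in range(0, len(values), BLOCK_SIZE):
        codes, scale = quantize_block(values[start:start + BLOCK_SIZE])
        out.extend(c * scale for c in codes)
    return out


if __name__ == "__main__":
    original = [0.1 * i for i in range(-16, 16)]
    approx = fake_quantize(original)
    worst = max(abs(a - b) for a, b in zip(original, approx))
    print(f"largest rounding error: {worst:.4f}")
```

The shared per-block scale is what lets such a coarse 4-bit grid cover both tiny and large numbers: each block is stretched to fit its own range, so the error stays proportional to that block's largest value.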

The result is that training a massive 10-trillion-parameter model now needs just one-quarter of the hardware previously required. Token costs, the price companies pay to generate text or answers from AI, also drop to one-tenth of current levels.

Each Rubin rack contains 220 trillion transistors across 72 GPUs. The system is cooled by liquid at 45°C that flows directly through the hardware. This allows data centres to eliminate expensive water chillers, the massive, power-hungry industrial cooling units typically needed to keep server rooms from overheating.

Microsoft, AWS, Google Cloud, and CoreWeave will deploy Rubin systems starting in the second half of 2026.

BlueField-4

NVIDIA introduced a Context Memory Storage platform that adds 16 terabytes of fast memory per GPU, addressing what’s become a critical pain point for AI labs and cloud providers.

The issue: as AI models get smarter, they need to remember more information (working memory). When this memory gets too big for one chip, it has to be sent across cables to other servers. This creates a data "traffic jam," or bottleneck, slowing the AI down.

BlueField-4 data processing units store this memory directly in the rack (the physical cabinet where the servers are stacked). Keeping the data close to the chips lets it move at 200 Gbps, eliminating those network delays. Every bus in the platform is encrypted, making it “confidential computing safe” for deploying proprietary models on third-party infrastructure.
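The overflow problem described above can be pictured as a two-tier cache: a small, fast tier next to the GPU and a larger tier elsewhere in the rack. The sketch below is a hypothetical illustration of that idea only; the class name, the least-recently-used eviction rule, and the capacity parameter are all assumptions, not NVIDIA's design or API.

```python
from collections import OrderedDict


class TieredContextCache:
    """Hypothetical two-tier store for an AI model's working memory (e.g. a KV cache)."""

    def __init__(self, gpu_capacity: int):
        self.gpu_capacity = gpu_capacity
        self.gpu = OrderedDict()  # small, fastest tier, kept in least-recently-used order
        self.rack = {}            # larger tier kept inside the same rack

    def put(self, key, value):
        self.rack.pop(key, None)  # never keep a stale copy in the slower tier
        self.gpu[key] = value
        self.gpu.move_to_end(key)  # mark as most recently used
        self._evict()

    def get(self, key):
        if key in self.gpu:
            self.gpu.move_to_end(key)
            return self.gpu[key]
        value = self.rack.pop(key)  # raises KeyError if the key was never stored
        self.put(key, value)        # promote it back to the fast tier
        return value

    def _evict(self):
        # Spill the least recently used entries to the rack tier instead of discarding them.
        while len(self.gpu) > self.gpu_capacity:
            old_key, old_value = self.gpu.popitem(last=False)
            self.rack[old_key] = old_value
```

The design choice being illustrated is that overflow is spilled somewhere nearby rather than thrown away or shipped to a distant server, so reloading it later is a short local hop.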

Alpamayo

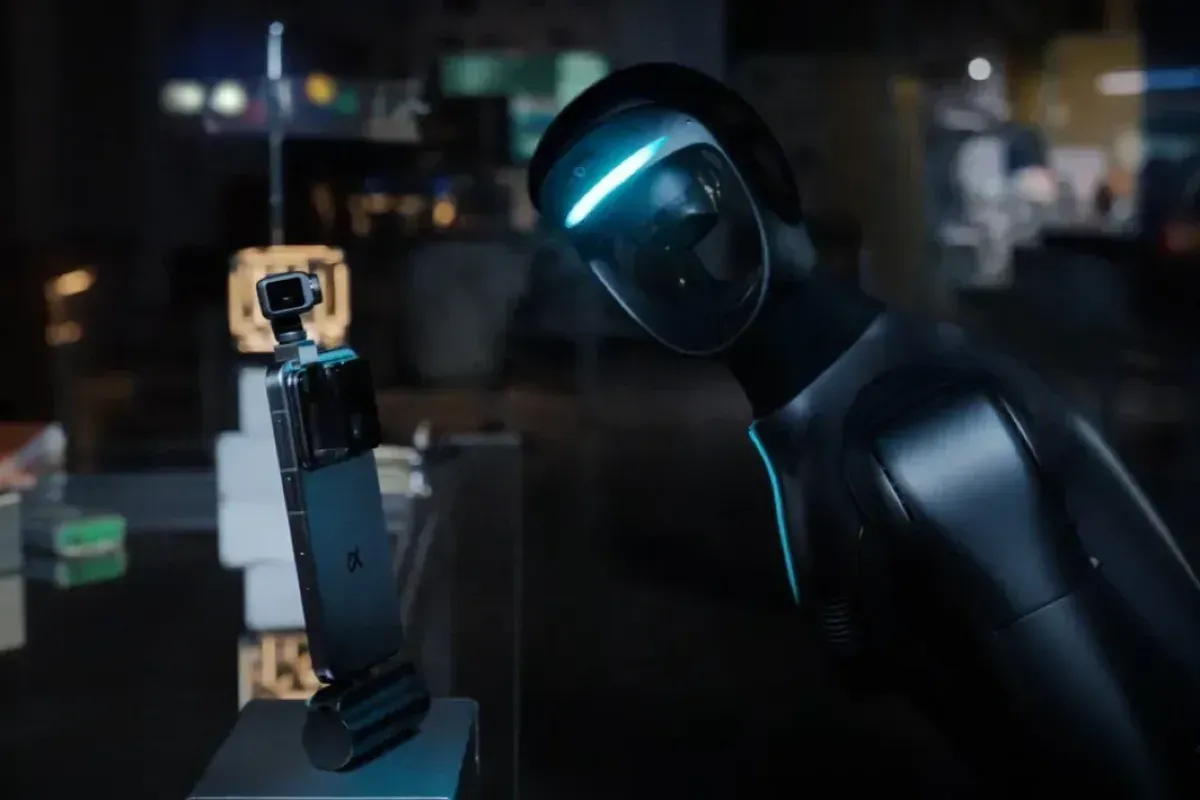

NVIDIA launched Alpamayo, a 10-billion-parameter model that doesn’t just react to driving scenarios: it breaks them down, explains its reasoning, and adapts to situations it’s never encountered.

The distinction matters. Traditional autonomous systems pattern-match against training data. Alpamayo reasons through problems.

“It’s impossible to collect every single possible scenario for everything that could ever happen in every country,” Huang said. “However, every scenario if decomposed into smaller scenarios are quite normal circumstances that the car knows how to deal with. It just needs to reason about it.”

The model runs alongside a traditional safety-certified AV stack. A policy evaluator continuously decides which system controls the vehicle: the reasoning AI handles normal driving, while the classical system takes over for rare or unpredictable situations that the AI might be less confident handling.
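As an illustration of how such a hand-off might work in principle, the sketch below shows a hypothetical policy evaluator that switches control based on the reasoning model's confidence. The class, the confidence score, and both thresholds are assumptions; NVIDIA has not published this logic. Using two thresholds (hysteresis) is one common way to stop control from flickering back and forth when confidence hovers near a single cut-off.

```python
class PolicyEvaluator:
    """Hypothetical arbiter between a reasoning model and a classical AV stack."""

    def __init__(self, enter_classical_below: float = 0.6, return_reasoning_above: float = 0.8):
        if not enter_classical_below < return_reasoning_above:
            raise ValueError("the lower threshold must be below the upper one")
        self.low = enter_classical_below
        self.high = return_reasoning_above
        self.active = "reasoning"  # the reasoning model handles normal driving

    def step(self, reasoning_confidence: float) -> str:
        """Return which system controls the vehicle for this decision cycle."""
        if self.active == "reasoning" and reasoning_confidence < self.low:
            self.active = "classical"  # unfamiliar situation: hand over to the certified stack
        elif self.active == "classical" and reasoning_confidence > self.high:
            self.active = "reasoning"  # confidence clearly recovered: hand control back
        return self.active


if __name__ == "__main__":
    evaluator = PolicyEvaluator()
    for confidence in [0.9, 0.7, 0.5, 0.7, 0.85]:
        print(confidence, evaluator.step(confidence))
```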

The 2025 Mercedes-Benz CLA ships with the full NVIDIA AV stack in Q1 2026 for US customers and Q2 2026 for Europe. Lucid Motors, Jaguar Land Rover, Uber, and Berkeley DeepDrive have already signed on to build this tech into their future fleets.

DLSS 4.5

NVIDIA announced DLSS 4.5, which uses a second-generation transformer model for Super Resolution to deliver better temporal stability across 400+ games. In practice, the picture stays smooth and clear even during fast action, with less flickering and fewer blurry edges. All GeForce RTX GPUs can use the upgraded upscaling now through the NVIDIA app.

Dynamic Multi Frame Generation, arriving in spring 2026 for RTX 50 series GPUs, generates up to five additional frames between traditionally rendered frames. The system automatically targets a frame rate that matches your monitor's refresh rate, so the game only generates as many frames as your screen can actually display. This keeps motion smooth without making your computer work harder than it needs to.
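The refresh-rate targeting comes down to simple arithmetic. The sketch below is a simplified, hypothetical model, not NVIDIA's actual algorithm (which is not public and presumably adapts frame by frame): given how many frames per second the GPU renders and the monitor's refresh rate, it picks the largest number of generated frames, capped at the announced maximum of five, that keeps output at or below the refresh rate.

```python
import math

MAX_GENERATED = 5  # announced maximum of extra frames per traditionally rendered frame


def generated_frames(rendered_fps: float, refresh_hz: float) -> int:
    """Hypothetical rule: extra frames per rendered frame without exceeding the refresh rate."""
    if rendered_fps <= 0 or refresh_hz <= 0:
        raise ValueError("frame rate and refresh rate must be positive")
    if rendered_fps >= refresh_hz:
        return 0  # the GPU already fills every screen refresh on its own
    # Total output frames per rendered frame that still fit under the refresh rate.
    multiplier = math.floor(refresh_hz / rendered_fps)
    return min(MAX_GENERATED, multiplier - 1)


if __name__ == "__main__":
    for fps, hz in [(60, 240), (40, 240), (30, 240), (100, 144)]:
        extra = generated_frames(fps, hz)
        print(f"{fps} fps on a {hz} Hz screen: {extra} generated, {fps * (extra + 1)} fps shown")
```

For example, a game rendering 60 fps on a 240 Hz monitor would need three generated frames per rendered frame to reach 240 fps, while at 30 fps the five-frame cap limits output to 180 fps.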

Over 250 games now support DLSS 4 technology, with major 2026 titles including 007 First Light, Phantom Blade Zero, and Resident Evil Requiem adding support at launch.

GeForce NOW expands to Linux and Fire TV

NVIDIA is adding native GeForce NOW apps for Linux PCs (Ubuntu 24.04 and later) and Amazon Fire TV Stick 4K devices. Beta versions of the Linux app will roll out in early 2026, addressing a top community request.

The service also added support for flight sticks and racing wheels, with day-one streaming for upcoming titles including Resident Evil Requiem, 007 First Light, and Crimson Desert.

Open AI models and industrial partnerships

NVIDIA released a suite of open AI models and datasets focused on “physical AI” and autonomous systems, including models for self-driving vehicles (Alpamayo), robotics frameworks (Cosmos, Isaac GR00T), and large language models for agentic workflows (Nemotron 3 family).

These tools are intended to reduce inference costs and support development for humanoid robots and reasoning AI agents.