NVIDIA made two moves on Monday that, taken together, could reshape the AI ecosystem. The chip giant debuted the Nemotron-3 family of open models and acquired SchedMD, the steward of the Slurm workload manager. On the surface, one is a model release and the other a corporate deal. In practice, they’re two halves of the same strategy.

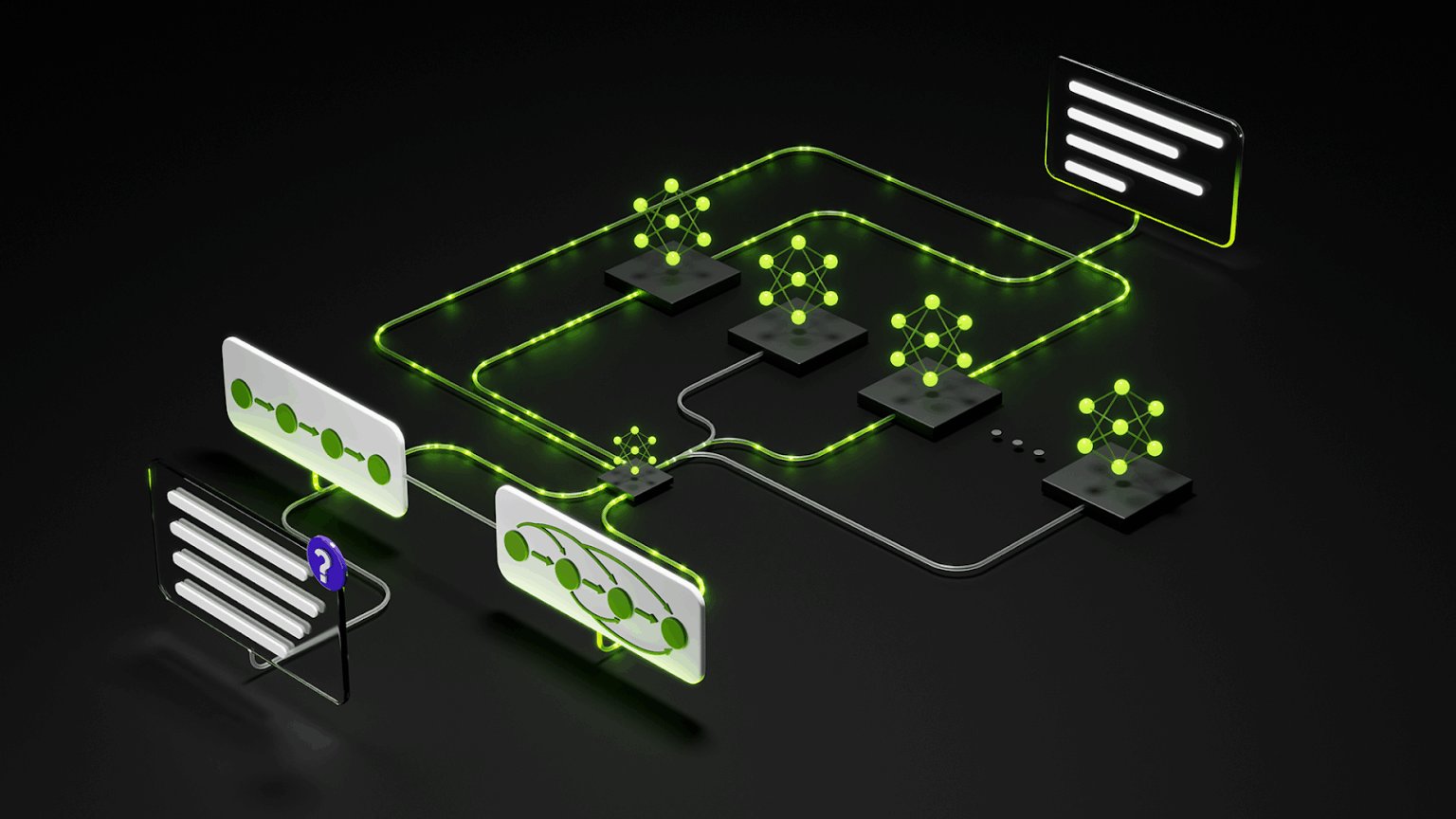

The Nemotron-3 lineup moves beyond standard chat-based LLMs into the emerging field of agentic AI. Built on a hybrid Mamba-Transformer Mixture-of-Experts architecture, the models are designed less for conversation and more for execution, prioritizing throughput, efficiency, and long-running autonomy.

That focus becomes clearer across the lineup. Nemotron-3 Nano, available immediately, acts as a high-speed worker for edge and lightweight agent tasks. The larger Super and Ultra variants, expected in the first half of 2026, are built for heavier work: planning, coordination, and multi-step reasoning over a one-million-token context window.

Just as important as the models themselves is how NVIDIA is releasing them. This isn’t a simple open-weights drop. NVIDIA is open-sourcing the surrounding development pipeline as well, including training datasets and reinforcement-learning tooling. The result is an ecosystem that nudges developers toward agentic systems built around NVIDIA’s hardware.

Once agents move from theory to production, however, model capability stops being the bottleneck. Coordination does.

Running large numbers of autonomous agents requires scheduling, prioritization, and fault tolerance at scale. That is where the SchedMD acquisition comes into focus. SchedMD maintains Slurm, the open-source workload scheduler used on more than half of the top 10 and top 100 systems in the TOP500 list of supercomputers.

NVIDIA has pledged to keep Slurm open source and vendor-neutral, but the strategic advantage remains. If Nemotron provides the vehicles, Slurm controls the traffic flow. Agentic workloads are dynamic and unpredictable, and owning the orchestration layer puts NVIDIA in a position to tune how those workloads move across its Blackwell GPUs in ways competitors cannot easily match.

“We’re thrilled to join forces with NVIDIA, as this acquisition is the ultimate validation of Slurm’s critical role in the world’s most demanding HPC and AI environments,” said Danny Auble, CEO of SchedMD.

Seen together, these moves resemble a masterclass in vertical integration. By commoditizing the model layer through open tools, NVIDIA undercuts proprietary AI platforms. By controlling orchestration, it protects itself against hardware challengers.

For enterprises, the implication is straightforward. NVIDIA is no longer just supplying accelerators. It’s steadily assembling the operating system for large-scale AI, shaping not just what models run, but how entire agent systems are built, scheduled, and deployed.